In this blog post we discuss how to use custom models in OAC. We walk through the process of developing Python scripts compatible with OAC to train and apply a model using your own Machine Learning algorithm.

At the time of writing, Oracle Analytics Cloud (OAC) ships with more than 10 Machine Learning algorithms, which fall under Supervised (Classification, Regression) and Unsupervised (Clustering) Learning. The inbuilt algorithms in OAC include CART, Logistic Regression, KNN, KMeans, Linear Regression, Support Vector Machine and Neural Networks (for an exhaustive list of inbuilt algorithms in OAC, please refer to our earlier blog post). These inbuilt algorithms cover the majority of real-world business use cases. However, users sometimes face cases or datasets where they need to use a different algorithm to achieve their goal.

Oracle Analytics-Library has an example of a custom Train/Apply Model using Support Vector Regression (SVR). Please refer to that sample to learn more about the required XML structure before proceeding further. We'll use that sample to walk through the parts of these scripts.

Model-Train-Script:

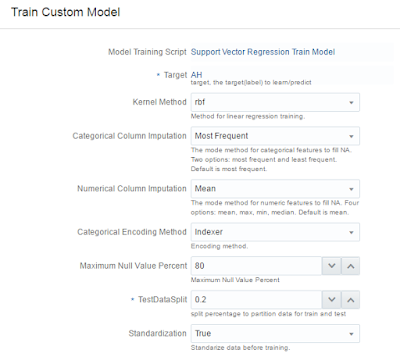

1) Capturing Parameters:

Data is typically prepared and pre-processed before it is sent for training a model. Pre-processing involves filling missing values, converting categorical values to numerical values (if needed) and standardizing the data. The way this pre-processing is done can significantly influence the accuracy of a model. In OAC, the Train Model UI provides parameters that let users choose the methods used for pre-processing. The same UI also provides a number of options for tuning the model. All the parameters sent from this user interface need to be captured before we start processing the data. For example, in the Train Model script for SVR the following snippet of code reads the parameters:

    ## Read the optional parameters into variables
    target = args['target']
    max_null_value_percent = float(args['maximumNullValuePercent'])
    numerical_impute_method = args['numericalColumnsImputationMethod']
    ...
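
As a minimal sketch of this step, the snippet below reads parameters from a dictionary and converts them to the types the rest of a script would expect. The `args` dictionary here is a hand-built stand-in for whatever the framework passes in, `get_param` is a hypothetical helper, and the `kernel` key and all default values are assumptions made for illustration, not part of the SVR sample.

```python
# Hand-built stand-in for the parameter dictionary passed to the script.
# Values are kept as strings here, so numeric ones must be converted.
args = {
    "target": "Sales",
    "maximumNullValuePercent": "50",
    "numericalColumnsImputationMethod": "Mean",
}


def get_param(params, name, default=None, cast=str):
    """Return params[name] converted with `cast`, or `default` if absent or empty."""
    value = params.get(name)
    if value is None or value == "":
        return default
    return cast(value)


target = get_param(args, "target")
max_null_value_percent = get_param(args, "maximumNullValuePercent", 50.0, float)
numerical_impute_method = get_param(args, "numericalColumnsImputationMethod", "Mean")
# 'kernel' is not supplied above, so the (assumed) default is used.
kernel = get_param(args, "kernel", "rbf")

print(target, max_null_value_percent, numerical_impute_method, kernel)
```

Reading every parameter through one helper keeps type conversion and defaults in a single place, which makes a missing or empty parameter easier to handle than a bare `args[...]` lookup.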

2) Data Pre-processing (optional):

Before we train a model, the data needs to be cleansed and, if necessary, normalized to get better predictions. In some cases the training data may already be cleansed, processed and ready for training. If it is not, users can define their own functions to perform the cleansing, or use the inbuilt methods in OAC. How to use the inbuilt methods in OAC to cleanse and prepare the data for training a model is discussed in detail in this blog: Prepare data using inbuilt functions in OAC
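
For readers who choose to write their own functions, here is a rough sketch of the three operations mentioned above: filling missing values, encoding categorical values, and standardizing. It uses only the Python standard library so that it runs anywhere. The function names and the sample columns are hypothetical, and this is not how the inbuilt OAC methods are implemented.

```python
from statistics import mean, pstdev


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def encode_categories(values):
    """Map each distinct category to an integer code (sorted for stability)."""
    codes = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [codes[v] for v in values], codes


def standardize(values):
    """Scale values to zero mean and unit variance; return the stats for reuse."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        sigma = 1.0  # constant column: avoid division by zero
    return [(v - mu) / sigma for v in values], (mu, sigma)


# Hypothetical sample columns.
age = [20, None, 40, 60]
region = ["east", "west", "east", "north"]

age_filled = impute_mean(age)                      # [20, 40, 40, 60]
region_codes, mapping = encode_categories(region)  # [0, 2, 0, 1]
age_scaled, (age_mean, age_std) = standardize(age_filled)
```

Note that `standardize` returns the mean and standard deviation it used. Keeping those values is what allows the same scaling to be repeated on the scoring data later.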

3) Train/Create Model:

Now we are ready to actually train the model. The Train Model process can be sub-divided into two steps: (a) splitting the data so that the model can be tested, and (b) training the model and capturing its performance/accuracy details.

Train-Test split: It is a good strategy to keep aside a randomized portion of the training data for testing. This portion is used to evaluate the model's performance in terms of accuracy. The amount of data used for training and testing is controlled by a user parameter called split. There is an inbuilt method that performs this split in a randomized fashion, which helps avoid bias or class imbalance problems. The following snippet of code performs the Train-Test split:

    # Split the data into train and test portions
    train_X, test_X, train_y, test_y = train_test_split(
        features_df, target_col, test_size=test_size, random_state=0)

Train Model: Now we have datasets ready for training and testing the model. It's time to train the model using the training method of the chosen algorithm. Most Python implementations of Machine Learning algorithms, such as those in scikit-learn, expose a fit() method for this. The following snippet of code does that for the SVR algorithm:

    # Construct the SVR estimator and fit it on the training data
    svr = SVR(kernel=kernel, gamma=0.001, C=10)
    SVR_Model = svr.fit(train_X, train_y)
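
To see the split and the fit working together outside of OAC, the sketch below runs both steps end to end with scikit-learn on synthetic data. The data, the 20% test size and the linear kernel are assumptions chosen to keep the example small. They are not the settings used in the SVR sample.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVR

# Synthetic data (an assumption for illustration): y is linear in x plus noise.
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = 2.0 * X[:, 0] + rng.normal(scale=0.1, size=200)

# Hold out 20% of the rows for testing; random_state makes the split repeatable.
train_X, test_X, train_y, test_y = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Fit the regressor on the training portion only.
svr_model = SVR(kernel="linear", C=10).fit(train_X, train_y)

# score() returns R^2 computed on the held-out rows.
r2 = svr_model.score(test_X, test_y)
print(len(train_X), len(test_X), round(r2, 3))
```

Because the model never sees the held-out rows during `fit`, the score on them is a fairer estimate of how the model will behave on new data than a score on the training rows would be.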

4) Save Model:

The model created in the previous step needs to be saved/persisted so that it can be accessed during the Apply/Scoring Model phase.

Save Model as pickle object: Created models are stored as pickle objects and are re-accessed during the Apply Model phase using a reference name. There are inbuilt methods in OAC to save the model as a pickle object. The following snippet of code saves the model as a pickle object:

    # Save the model as a pickle object and create a reference name for it.
    pickleobj = {'SVRegression': SVR_Model}
    d = base64.b64encode(pickle.dumps(pickleobj)).decode('utf-8')

In this case SVRegression is the reference name for the model. The pickle doesn't have to contain just the model: other information and objects can be saved alongside it. For example, if you wish to save additional flags or standardizer indexes along with the model, you can create a dictionary that contains the model and the flags/indexers, and save the entire dictionary as a pickle object.
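
The idea of bundling extra objects with the model can be sketched as follows. To keep the example dependency-free, the "model" is a plain dictionary of coefficients standing in for a fitted estimator such as SVR_Model. The extra keys (`standardize`, `feature_means`, `feature_stds`) are hypothetical names chosen for illustration.

```python
import base64
import pickle

# Stand-in for a fitted model: plain coefficients instead of a real estimator.
model = {"coef": [2.0, -1.0], "intercept": 0.5}

# Extra objects the Apply script would need to repeat the same pre-processing.
bundle = {
    "SVRegression": model,          # reference name -> model
    "standardize": True,            # flag (hypothetical)
    "feature_means": [10.0, 3.0],   # statistics captured at training time
    "feature_stds": [2.0, 1.5],
}

# Serialize to bytes, then to base64 text so it can be stored as a string.
encoded = base64.b64encode(pickle.dumps(bundle)).decode("utf-8")

# Round trip to confirm that nothing is lost.
restored = pickle.loads(base64.b64decode(encoded.encode("utf-8")))
print(restored == bundle)
```

Saving the training-time statistics next to the model means the Apply script does not have to recompute them from scoring data, which could differ from the training data.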

5) Add Related Datasets (optional):

Now that we have the model, let us see how well it performs. In step 3 we set aside part of the data for testing the model. Using that test dataset, let us calculate some accuracy metrics and store them in Related datasets. This is an optional step, and users who are confident about the model's accuracy can skip it. However, users who wish to view the accuracy metrics or populate them in the Quality tab can use inbuilt methods in OAC to create the related datasets. More information on how to add these Related datasets can be found in this blog: How to create Related datasets

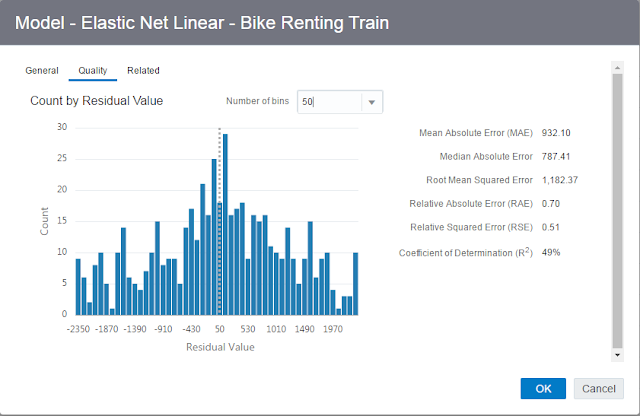

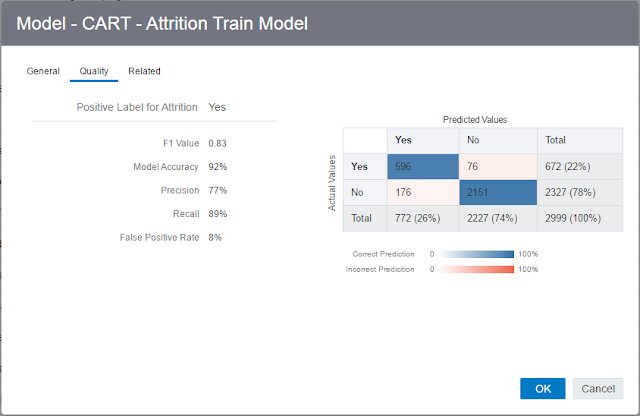
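
As a rough illustration of the kind of accuracy metrics a related dataset might hold for a regression model, the sketch below computes MAE, RMSE and R² from test values and predictions. It covers only the calculation. The function name, the sample values and the one-row-per-metric layout are assumptions, and the inbuilt OAC methods for creating related datasets are not shown.

```python
from math import sqrt


def regression_metrics(actual, predicted):
    """Return MAE, RMSE and R^2 for two equal-length sequences."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)
    rmse = sqrt(ss_res / n)
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1.0 - ss_res / ss_tot if ss_tot else float("nan")
    return {"MAE": mae, "RMSE": rmse, "R2": r2}


# Hypothetical held-out targets and the model's predictions for them.
test_y = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]
metrics = regression_metrics(test_y, y_pred)

# One row per metric, a shape a small "metrics" dataset could take.
rows = [{"Metric": name, "Value": round(value, 4)} for name, value in metrics.items()]
for row in rows:
    print(row)
```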

6) Populate Quality Tab (optional):

In the model Inspect pane there is a tab called Quality, which visualizes the model accuracy details. Users can view the Quality tab, evaluate the model's accuracy, and decide whether to tune the model further or use it for prediction. This step is also optional. Users who wish to view the model quality details in the Quality tab can use inbuilt functions in OAC. More details on the inbuilt functions that populate the Quality tab can be found in this blog: How to Populate Quality Tab.

Model-Apply-Script:

The Apply script should have the same name as the Train script, with the train part replaced by apply; that is, it should follow the nomenclature OAC.ML.Algo_Name.apply.xml. The Apply script accepts the model name, the data pre-processing parameters and other user parameters as input. Most of the pre-processing steps are the same as in the Train Model script:

1) Capturing Parameters

2) Data Pre-processing: The same inbuilt methods can be used for cleansing (filling missing values), encoding and standardizing the scoring data.

After the data is cleansed and standardized, it can be used for Prediction/Scoring.

Load Model and Predict:

Using the reference name we gave the model in the Train script, retrieve the model from the pickle object and predict results for the cleansed and standardized scoring data. The following code in the SVR Apply Model script does that:

    ## Load the pickle object that we saved during the Train Model phase. It is stored as an
    ## element in a dictionary. Fetch it using the reference name given.
    pickleobj = pickle.loads(base64.b64decode(bytes(model.data, 'utf-8')))
    SVR_Model = pickleobj['SVRegression']

    ## Predict values.
    y_pred = SVR_Model.predict(data)
    y_pred_df = pd.DataFrame(y_pred.reshape(-1, 1), columns=['PredictedValue'])

If the includeInputColumns option is set to True, the framework appends the predicted result to the input columns and returns the complete dataframe.
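
The load-and-predict flow can be tried outside of OAC with the dependency-free sketch below. It assumes the Train side stored a dictionary holding a stand-in linear model (not a real SVR) together with the standardization statistics. The key names other than `SVRegression`, and the helpers `standardize_row` and `predict_row`, are hypothetical.

```python
import base64
import pickle

# Simulate what a Train script stored: a stand-in model plus scaling statistics.
saved = base64.b64encode(pickle.dumps({
    "SVRegression": {"coef": [2.0, -1.0], "intercept": 0.5},
    "feature_means": [10.0, 3.0],
    "feature_stds": [2.0, 1.5],
})).decode("utf-8")

# Apply side: decode, unpickle, and look up the pieces by reference name.
bundle = pickle.loads(base64.b64decode(saved.encode("utf-8")))
model = bundle["SVRegression"]


def standardize_row(row, means, stds):
    """Scale a row with the statistics captured at training time."""
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


def predict_row(model, row):
    """Linear stand-in for calling predict() on a fitted estimator."""
    return model["intercept"] + sum(c * x for c, x in zip(model["coef"], row))


scoring_rows = [[12.0, 3.0], [10.0, 4.5]]
predictions = [
    predict_row(model, standardize_row(r, bundle["feature_means"], bundle["feature_stds"]))
    for r in scoring_rows
]
print(predictions)
```

The scoring rows are scaled with the training-time means and standard deviations rather than with statistics recomputed from the scoring data, so the model sees inputs on the same scale it was trained on.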

This concludes the process of developing scripts for Train and Apply of Custom models.

Related Blogs: Prepare data using inbuilt functions in OAC, How to add Related Datasets, How to Populate Quality Tab
